Understanding Modern Cloud Architecture on AWS: Storage and Content Delivery Networks

Table of Contents

- Introduction

- Simple Storage Service, aka S3

- Content Delivery Networks, aka CDNs

- AWS CloudFront

- Final Thoughts

- Watch Instead of Read on YouTube

- Other Posts in This Series

Introduction

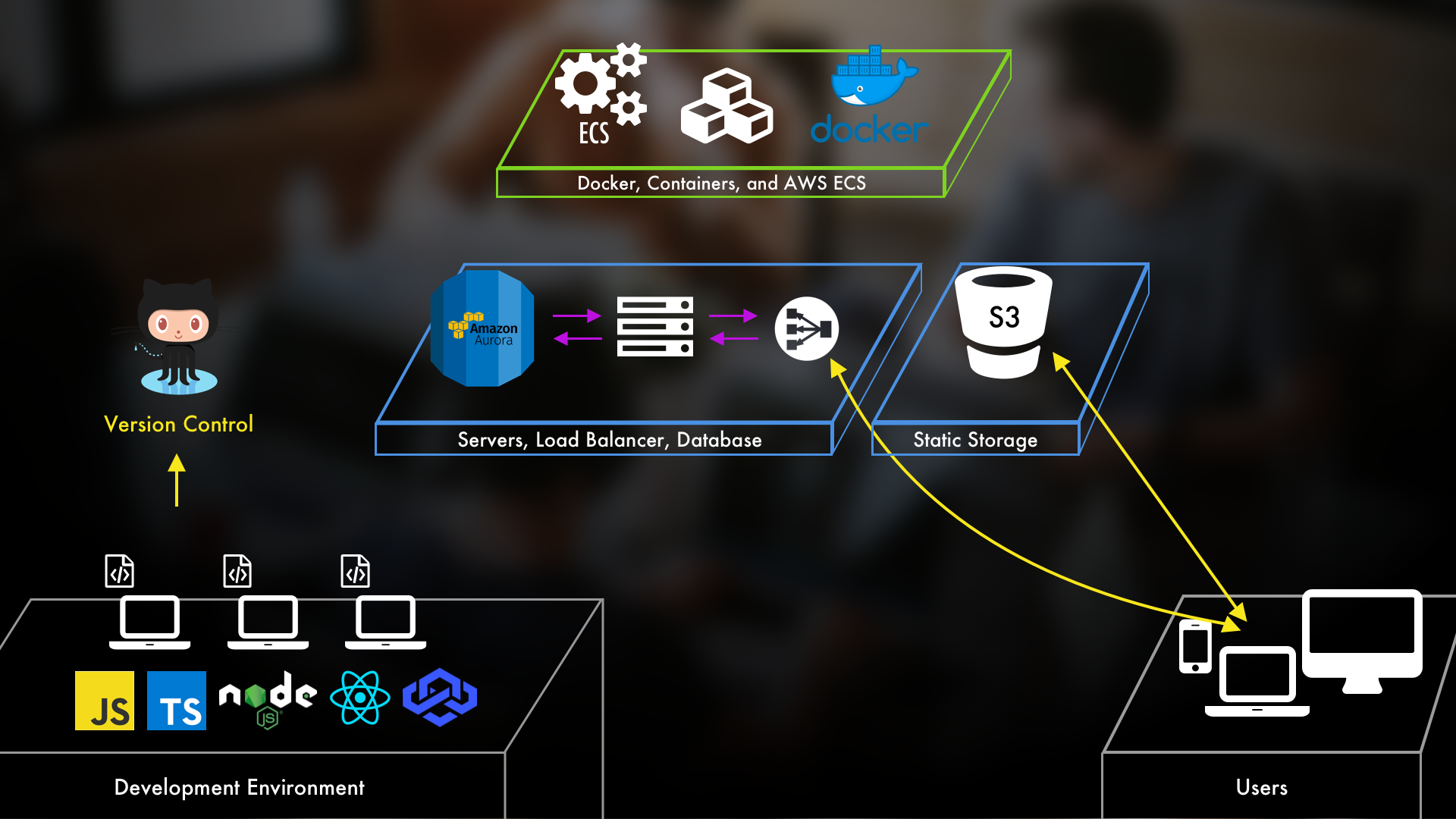

The architecture we've built is now in a great place. We've got a way forward when we want to add new services and when/if we want to scale things out further. Now we'll move into looking at the pieces beyond this core architecture by asking this question: how do we make the most of what we've got, and how do we do so while making a better experience for our users?

(If you missed part five on Container Orchestration with ECS, you can read it here.)

On this topic, there are a TON of things that could be done to answer those hows. In this post we'll examine one of the options by discussing AWS's Simple Storage Service (S3) and their CloudFront Content Delivery Network. In a nutshell: S3 is where you can store static files and control permissions and access to them. And CloudFront...well, we'll get more into that soon.

Simple Storage Service, aka S3

Right from the get-go it's worth asking: how are these services helpful to us in the context of the cloud architecture we've been building? First up, we've assumed that the applications we've launched into our architecture thus far are web applications. Because of that, they probably have all sorts of static files like images, CSS, and JavaScript.

As our architecture is right now, we're serving these files directly from our application...which means they're coming from the containers...which means they're using up resources on our host. Since those files rarely change, it's a massive waste of our host's resources to serve them every single time.

Furthermore, file access and delivery can really bog down your application, depending on how often it has to do it. Also, if our application keeps every single image it needs to serve locally, our containers are going to be pretty big. That leaves less space on our instances for more containers, since the ones we have are bloated with static files.

A very simple performance boost here would be to pull all of those static files out of our application and containers, and instead serve them over something like Simple Storage Service, also known as S3.

Now, when users go to interact with our application, they'll grab all of the static files from S3 (so HTML, CSS, JavaScript, and the like). With that being the case, the containers on our servers can now worry about only sending and working with data. Without having to worry about sending our files and images, we've freed up resources and compute, which means we can get even MORE out of our EC2 instances.
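To make that a bit more concrete, here's a minimal sketch of what pulling static files out of a container and into S3 could look like. It's an illustration rather than the exact process used in this series: the `static` directory, the `static` key prefix, and the function names are all assumptions made up for the example. The upload step assumes the `boto3` library is installed and that AWS credentials are already configured.

```python
import mimetypes
from pathlib import Path


def plan_uploads(static_dir, prefix="static"):
    """Return (local_path, s3_key, content_type) for every file under static_dir."""
    root = Path(static_dir)
    plan = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        # S3 keys use forward slashes, regardless of the local operating system.
        relative = path.relative_to(root).as_posix()
        key = f"{prefix}/{relative}" if prefix else relative
        # Guess the Content-Type so browsers treat CSS as CSS, images as images, etc.
        content_type = mimetypes.guess_type(path.name)[0] or "application/octet-stream"
        plan.append((path, key, content_type))
    return plan


def upload_static_files(static_dir, bucket, prefix="static"):
    """Upload every planned file to the bucket (hypothetical helper, needs boto3)."""
    import boto3  # imported here so plan_uploads works without boto3 installed

    s3 = boto3.client("s3")
    for path, key, content_type in plan_uploads(static_dir, prefix):
        s3.upload_file(str(path), bucket, key, ExtraArgs={"ContentType": content_type})
```

Calling `plan_uploads("static")` first is a cheap way to check which keys would be created before anything is sent to a bucket.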

Content Delivery Networks, aka CDNs

Okay, so that's pretty simple, right? But if you've done anything with S3 before, then you know that each bucket lives in one particular region.

So let's say that the S3 bucket for your application is located in Northern Virginia. If your users are coming from somewhere far away like the United Kingdom, their requests have quite the journey to take to get your files. This is where a content delivery network comes into play.

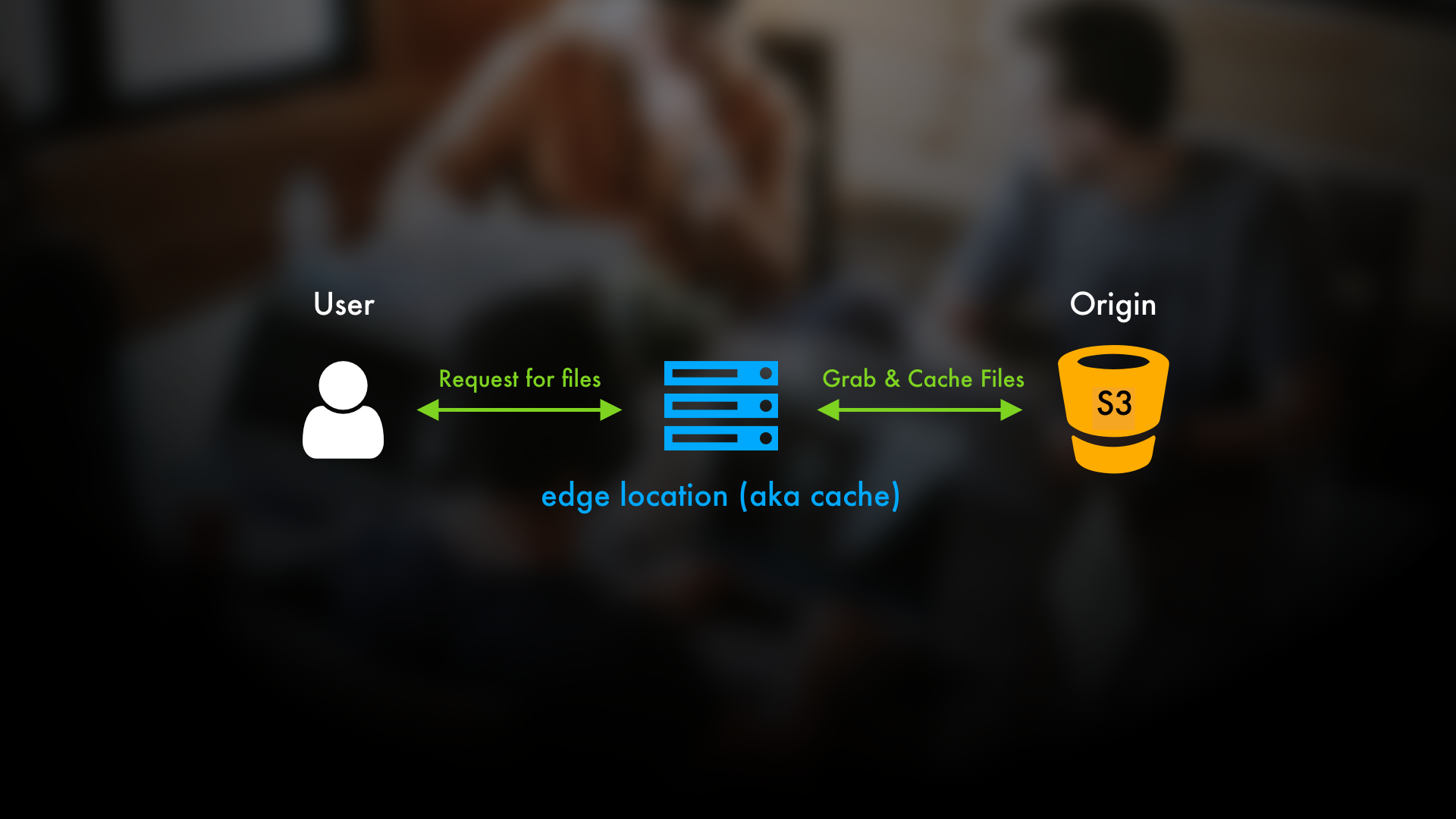

A content delivery network, also called a CDN, is a network of servers and data centers distributed all around the world. A CDN takes a source, like an S3 bucket, and makes its files available from all of those locations. When a user makes a request for something in your S3 bucket, they'll instead go to the CDN servers closest to them.

In plain English, when someone goes to grab something from your S3 bucket, they'll go to the CDN servers nearest to them instead of travelling all the way to where your S3 bucket is, which in this example is Northern Virginia. If those CDN servers haven't copied the file from the original S3 bucket yet, they'll fetch it then and cache it. Otherwise, they'll just hand over the copy they already have. Because the CDN servers are closer, it'll be far faster for the user.
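The "copy it on the first request, hand it over after that" behavior can be sketched as a toy simulation. This is not how any real CDN is implemented; the `Origin` and `EdgeLocation` classes are hypothetical stand-ins for the S3 bucket and a single CDN location, and things like cache expiry and eviction are left out on purpose.

```python
class Origin:
    """Stands in for the S3 bucket; counts how often it is actually contacted."""

    def __init__(self, files):
        self.files = dict(files)
        self.requests = 0

    def fetch(self, key):
        self.requests += 1
        return self.files[key]


class EdgeLocation:
    """Stands in for one CDN location with its own local cache."""

    def __init__(self, name, origin):
        self.name = name
        self.origin = origin
        self.cache = {}

    def get(self, key):
        # Cache hit: serve the local copy without touching the origin.
        if key in self.cache:
            return self.cache[key], "hit"
        # Cache miss: make the long trip to the origin once, then keep a copy.
        body = self.origin.fetch(key)
        self.cache[key] = body
        return body, "miss"


bucket = Origin({"logo.png": b"image bytes"})
london = EdgeLocation("london", bucket)
first = london.get("logo.png")   # first request has to go back to the bucket
second = london.get("logo.png")  # second request is served from the edge cache
```

Only the first request from a given location pays for the trip to the bucket, which is why `bucket.requests` stays at 1 here no matter how many more times London asks for the same file.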

AWS CloudFront

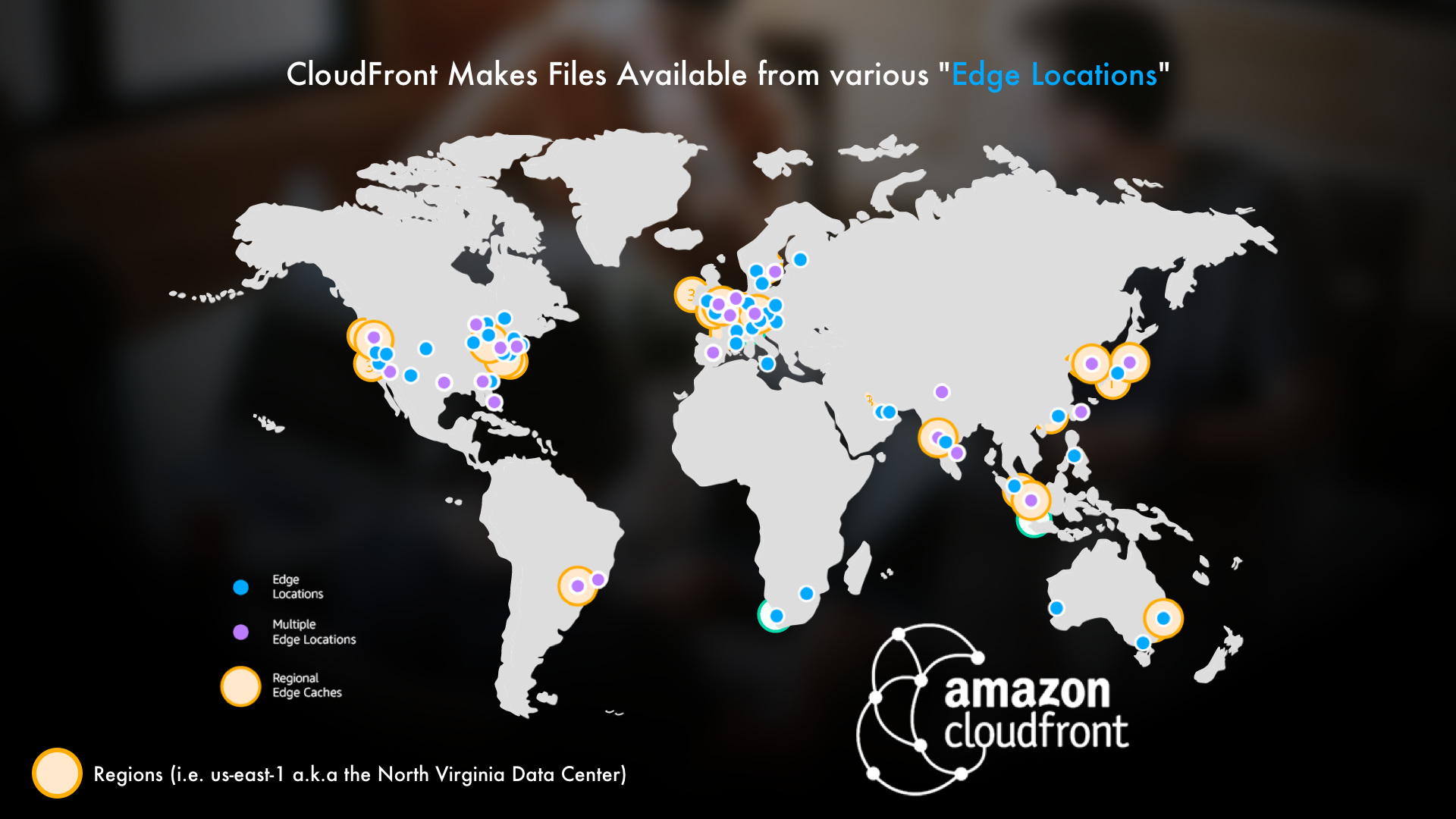

CloudFront is AWS's service for creating content delivery networks (CDNs). The various locations around the world that have the servers and data centers making up this content delivery network are known as "edge locations." There are over 200 of them! Looking at the map, we can see that since these are global, we can make static files geographically closer to most end users. This means faster response times and all of the other benefits that come with that.

To get this working in our current cloud architecture setup, all we do is tell CloudFront about our S3 bucket...and it'll make those files available to all of these different locations. It's really that simple. Using our same example from earlier, if your users in the United Kingdom want to get files and images from your S3 bucket, they can just get them from locations closer to them instead of going all the way over to Northern Virginia.

I know what you're thinking, "How do we set that up?" When working with S3 normally, you just upload the files and then you point to the URL of the file. With CloudFront, the only extra step is just telling CloudFront about your S3 bucket and then using the CLOUDFRONT URL in your applications instead of the S3 one.
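On the application side, that URL swap can be as small as changing one host name. Here's a rough sketch, assuming a hypothetical bucket named `my-app-assets` in `us-east-1` and a made-up CloudFront domain; your real values will differ, and `asset_url` is just an illustrative helper, not part of any AWS library.

```python
from urllib.parse import quote

# Both hosts are hypothetical placeholders for illustration only.
S3_HOST = "my-app-assets.s3.us-east-1.amazonaws.com"
CLOUDFRONT_HOST = "d1234example.cloudfront.net"


def asset_url(key, host):
    """Build the public URL for a static file stored under the given key."""
    # quote() escapes spaces and similar characters but keeps the slashes in the key.
    return f"https://{host}/{quote(key.lstrip('/'))}"


before = asset_url("static/css/site.css", S3_HOST)
after = asset_url("static/css/site.css", CLOUDFRONT_HOST)
```

Keeping the host in a single setting like this means moving from the S3 URL to the CloudFront one is a configuration change rather than a hunt through templates.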

Final Thoughts

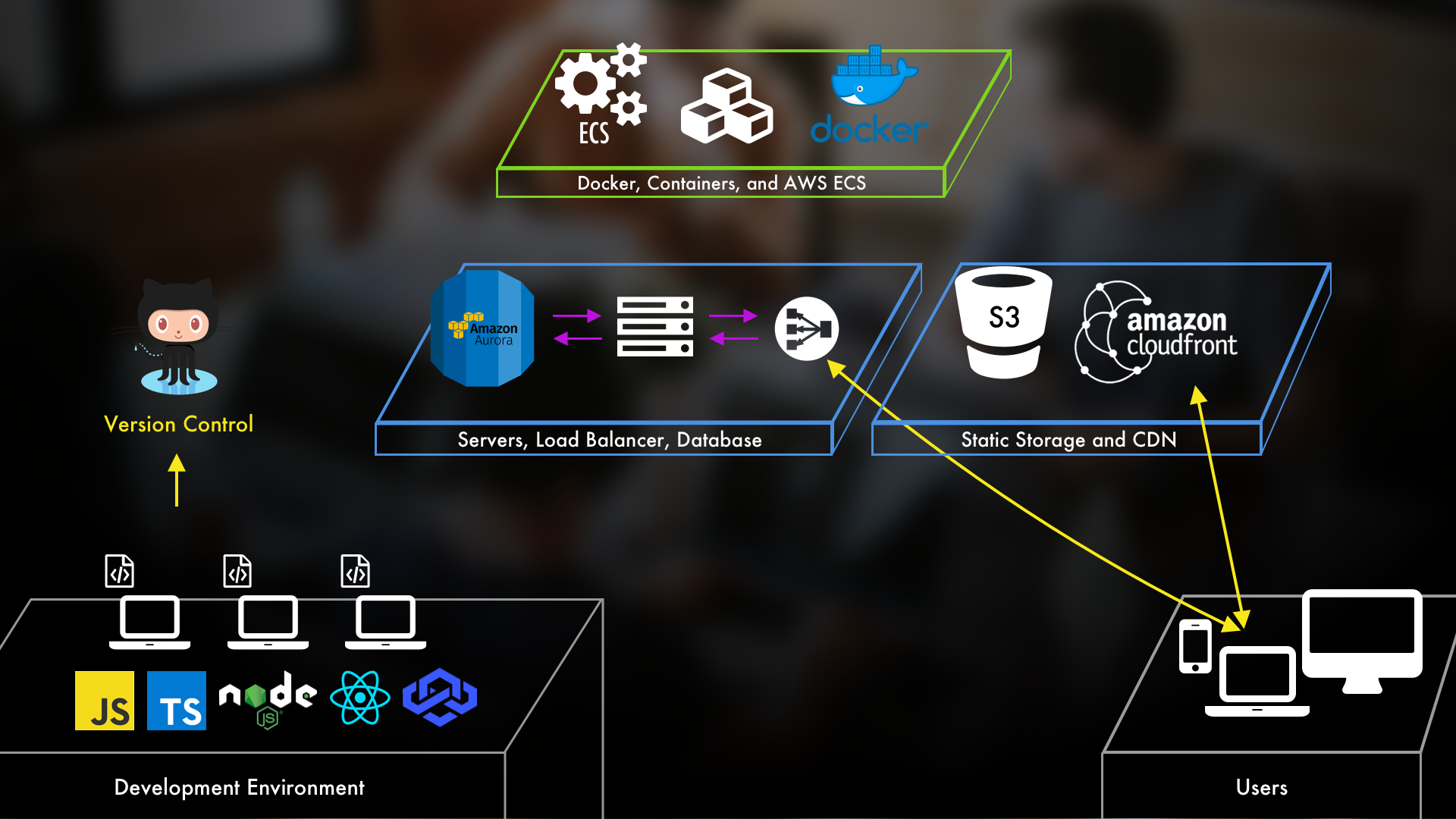

With that, our architecture is growing in sophistication in a great way:

When users come to our applications, they'll wind up getting their static files from CloudFront and the data from our actual applications in our containers on our EC2 instances. This is going to free up a lot of power on those instances so that we can make even better use of them. And, since the static files will be coming from locations close to the user, their experience is improved as well.

But you can probably guess at the next issue we're going to face with this: how do we keep track of what's going on in our applications? And on our servers? What if errors start happening, how do we track those? We'll tackle all of that in the next post when we discuss Centralized Monitoring and Logging.

Watch Instead of Read on YouTube

If you'd like to watch instead of read, the Understanding Modern Cloud Architecture series is also on YouTube! Check out the links below for the sections covered in this blog post.

Video 1 - Storage and Content Delivery Networks (CDNs) for Your Modern Cloud Architecture (for sections 2-4)

Other Posts in This Series

If you're enjoying this series and finding it useful, be sure to check out the rest of the blog posts in it! The links below will take you to the other posts in the Understanding Modern Cloud Architecture series here on Tech Guides and Thoughts so you can continue building your infrastructure along with me.

- Understanding Modern Cloud Architecture on AWS: A Concepts Series

- Understanding Modern Cloud Architecture on AWS: Server Fleets and Databases

- Understanding Modern Cloud Architecture on AWS: Docker and Containers

- Interlude - From Plain Machines to Container Orchestration: A Complete Explanation

- Understanding Modern Cloud Architecture on AWS: Container Orchestration with ECS

- Understanding Modern Cloud Architecture on AWS: Storage and Content Delivery Networks

J Cole Morrison

http://start.jcolemorrison.com

Developer Advocate @HashiCorp, DevOps Enthusiast, Startup Lover, Teaching at awsdevops.io