Understanding Modern Cloud Architecture on AWS: Docker and Containers

Table of Contents

- Introduction

- Problems with Hosting Directly on EC2 Instances

- Docker: The Problem Solver

- Containers

- Final Thoughts on Docker

- Watch Instead of Read on YouTube

- Other Posts in This Series

Introduction

The architecture of our conceptual application is in a pretty good place right now with all the pieces we put together in the last post. Between an Auto Scaling Group, an RDS Aurora database, and a Load Balancer, many applications could just stop there. But we're looking to go through all the important elements of cloud architecture, not just the bare minimum. And so, while our fictional team works hard to improve the application and bring in new users, we're going to pause and talk about Docker and Containers. Yes, yes, this topic has an insane amount of buzz surrounding it, but...for good reason. It really can be as useful as they say.

(If you missed part two on server fleets and databases, you can read it here.)

Now before we dive in, just a disclaimer: we are not going to be exploring the ins and outs of Docker here. The first reason is that it's a HUGE topic, and if we covered it in depth we wouldn't get anything else done. The second reason is that I have an entire free video series called The Hitchhiker's Video Guide to AWS ECS and Docker that dives deep into the whole range of Docker and ECS. So if you want a more expanded look, definitely check that out. (And the graphics are pretty fun if I do say so myself.)

However, in this cloud architecture-focused series, we are going to cover the main concepts of Docker and Containers so that (a) you can see how they fit into the overall picture of creating a cloud infrastructure and (b) you can design architectures with them yourself in the future. So, first up, let's talk about how things stand right now with the EC2 instances hosting our application.

Problems with Hosting Directly on EC2 Instances

Right now our application is set up directly on the servers themselves, and when we're ready to share it with the world, we direct traffic to a port on that server. In our EC2 instances' case, this traffic is coming from a load balancer, but it's still going to a particular port on the instance.

Well...there are a few problems with this that aren't immediately obvious.

1) Our application can be affected by the server.

When you say that out loud, the first reaction is probably "Oh yeah, of course. Obviously." However, it becomes a much bigger problem down the line than it seems at first glance. What happens if you need to do a major operating system upgrade? What happens if you need to update some packages on the host to newer versions? What happens if you want to change the operating system altogether?

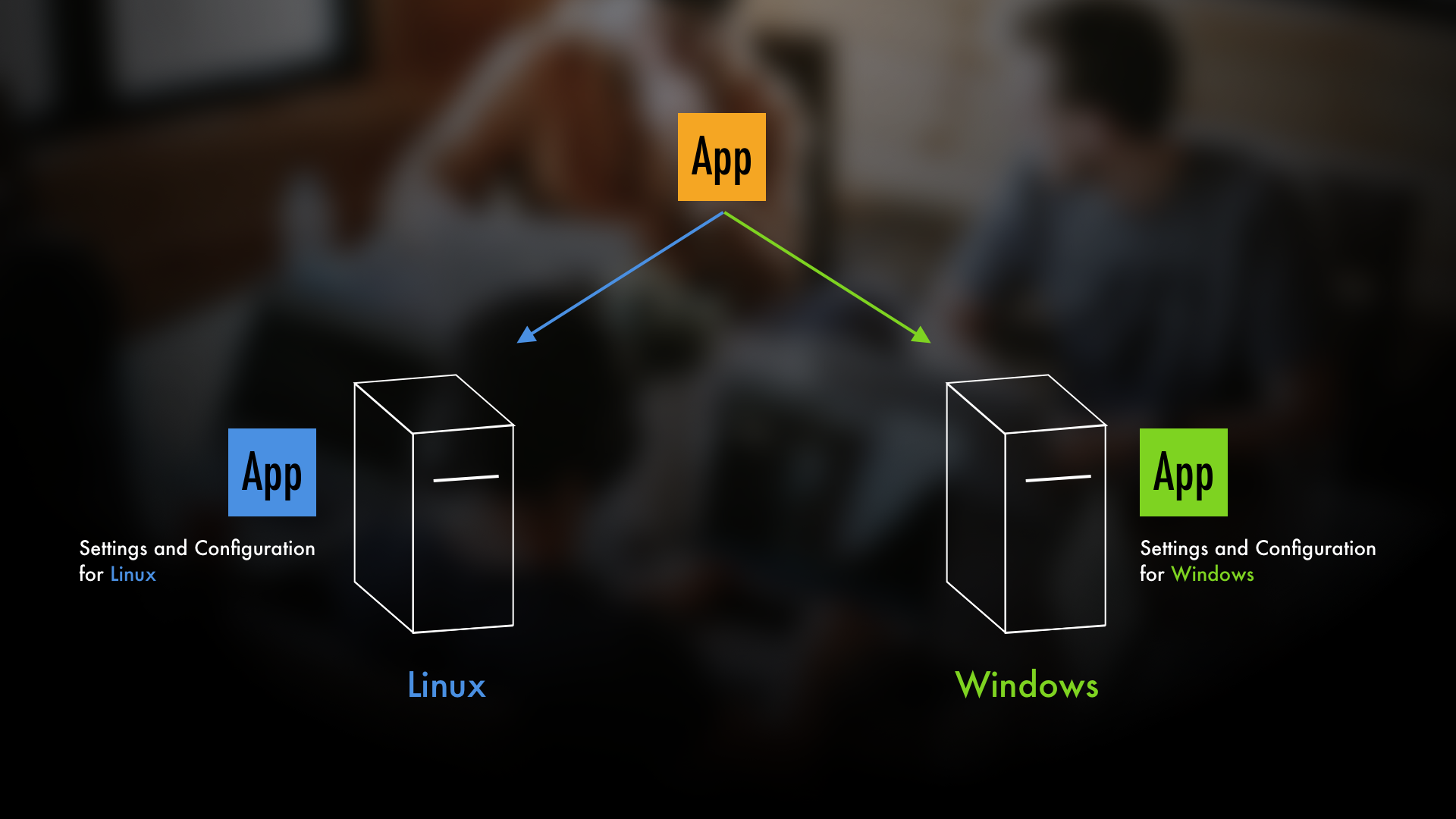

Well, you're going to have to keep tweaking your application to fit the particular hosting server. For example, the way you'd configure your application to run on an Ubuntu Linux EC2 instance is going to be different from how you'd do it on Windows. And so, if you wanted to move your application from one OS to the other...suddenly you'd wind up managing different configurations of your application: all the settings and details for when it's on a Linux box, and all the settings and details for when it's on a Windows box. Essentially, you wind up with two versions, which isn't ideal.

2) Our application can be affected by other apps and services on the server.

So let's say you want to cram two different versions of Node.js onto your system. How do you manage the dependency differences?

Don't get me wrong, it's absolutely possible, but it can become pretty complicated to manage. If your applications depend on different background services, and different versions of those services...management becomes even more difficult. And what happens if the two applications you're running are both web services? Well, if both want to use port 80 for HTTP...and your server only has one port 80...what do you do? Of course, you could put something like a reverse proxy in front of them, but that won't solve all of the other problems.

3) Making the most out of your server is difficult.

What I mean by that is this: At any given time, whatever application you're hosting on your instance is probably not taking up ALL of the free space, memory, and CPU available. And so, you may wind up with all of these servers that have spare compute and memory that are just sitting there waiting on the off chance that you hit the front page of TechCrunch.

Now, given the scenario we're working with, what if our fictional company decides it wants to make a data processing application? One option would be to just remake our architecture for this new application. But the more sensible option would be to put this new data processing application on the servers we already have. This way we aren't wasting resources. But we know that there are two really big barriers to this, both mentioned above:

a) Our application can be affected by the server.

AND

b) Our application can be affected by other apps and services on the server.

Again, both of these issues come down to the fact that our application may require different things than this new application does. That means more dependencies and potential conflicts on the hosting server. More dependencies lead to more needed space, management, and complexity...which leads to the dark side.

Docker: The Problem Solver

Those are three major problems that come with hosting applications directly on our servers. For those of you who've been in this space but don't know about Docker, you might be reaching for virtual machines right now. And that's absolutely a solution...but VMs are far heavier than the trendy, new-age solution of Docker.

So, before we talk about how it solves these problems in particular, let's walk through what Docker is and what it can do for us. The general workflow is this:

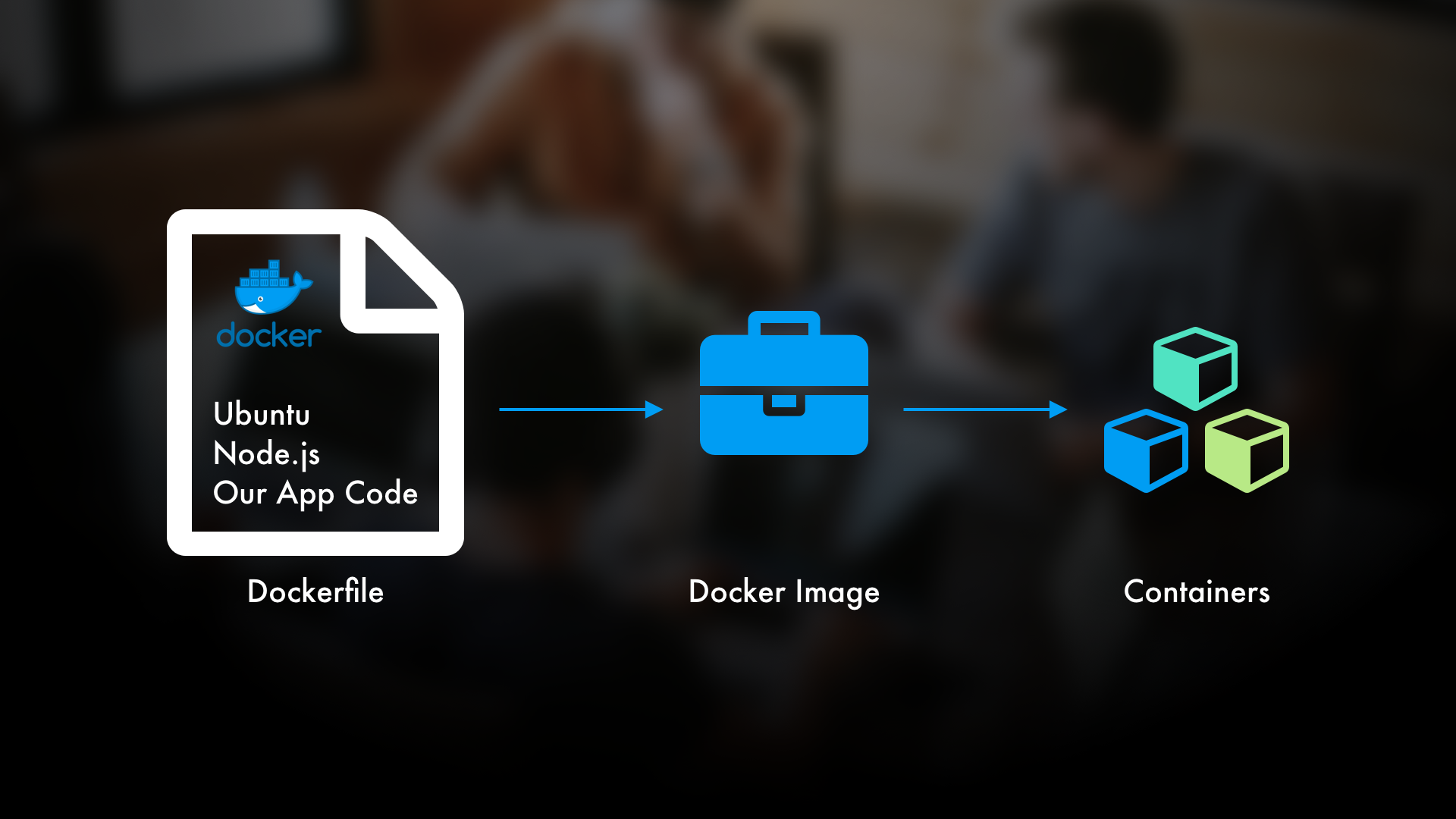

- Make a "Dockerfile" that lists everything you want in your "Docker Image"

- Make a "Docker Image" that will serve as a template for your "Containers"

- Make your "Containers" from your "Docker Image"; they will essentially be isolated mini-computers...on your computer.

So let's begin with Docker images. The easiest way to think of Docker images is that they're like templates for containers (which we'll talk about shortly). Obviously this is a bit simplified, but it's practical. When it comes to creating a Docker image, you begin with what's known as a Dockerfile. In the Dockerfile you list everything you want in your Docker Image and ultimately in your Docker container. So in this example, we want the Ubuntu operating system, the software for Node.js, and our application code.

Now, you'll immediately notice that we can define what operating system we want. That's a great thing about containers: we can define whatever the heck we want in them. We'll talk about containers more shortly, but again, thinking of them as isolated mini-computers that we can run our code in is a good starting point. (If this whole bit on containers is a bit fuzzy, don't worry.)

After we've made this Dockerfile, we build a Docker image from it. That Docker image is now ready to be used for creating containers. Again, you can think of the Docker image as a template for containers: it has all of this stuff ready for any containers made from it.

Containers

Okay, so now we get to talk about the containers themselves. When we're ready, we can make containers from the Docker image we talked about above.

When you make a container, it's going to have ALL of those things in it that you defined in the Docker image. So, in our case, if we made three containers from this Docker image, they'd all have separate instances of Ubuntu, Node.js, and our application code.

The best part of this is that they're separated and isolated from one another. They don't affect each other, which makes containers an extremely clean way to host multiple things on one machine. Granted, our example is a bit simple here, but let's say we wanted three different applications on our one machine: a Node one, a Python one, and a Rails one. And let's say all of them are web services.

Maybe the Node container is our main one, our Python application is some administrative app, and the Rails one is a legacy app. Well, with containers, all three could easily coexist on the same machine without stepping on each other's toes. All three could be built on completely different Linux distributions with completely different dependencies BUT (and here's the incredible benefit of Docker)...the only thing this host machine needs is Docker!

That's right. We don't have to worry about installing different versions of the dependencies and we don't have to worry about the version of our host's operating system. All we have to do is make sure that our host has Docker. That's it.

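And now, if you wanted to host these containers on a Windows machine, or maybe move them to a different Linux machine? No problem! Just make sure the new machine has Docker and you're good to go. (On Windows and macOS, Docker Desktop quietly runs Linux containers inside a lightweight Linux VM, but you don't have to manage that yourself.) Oh, and if you want to update your host? Go for it; the containers are CONTAINED anyway, so they won't be affected.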

Thinking of Containers as "Mini-Computers"

There are a lot of nuts and bolts to containers that we won't dive into here, but thinking of them as "mini-computers" is a nice mental model. Much like with servers in our last post, it's easy to get intimidated by containers due to the perceived complexity that docs, marketing, and bad resources create.

To drive home how much these are like mini-computers, you can do the following:

(assuming you have Docker installed)

a) Make a Dockerfile that includes Ubuntu

```dockerfile
FROM ubuntu:18.04
```

b) Create a Docker Image from that Dockerfile

```shell
# Assuming the Dockerfile is in the same directory
docker build -t first_ubuntu_image .
```

c) Create a container from the image in (b) and dive into it

```shell
docker run -it first_ubuntu_image
```

After (c) you'll notice that you're in another terminal...one for the container! If you run some basic Unix commands like ls, you'll notice a file system, and that you can pretty much treat it just like a separate computer. Why? Because we made a container that has Ubuntu 18.04 in it, and so that's what you're seeing.

Yes, this is simple, but it's the general workflow. The difference for, say, a container that runs an application is that its image includes ALL of the things we need for that application instead of just an operating system like Ubuntu. Generally, we'll also tell the image which scripts and/or default commands to run.

Cole...this is a lot like Virtual Machines (VMs)

Indeed. We won't go deep into VMs because, as mentioned in the last post, they're their own informational rabbit hole. However, the main differences, in a nutshell, come down to ISOLATION and RESOURCE USAGE.

Yes, containers are isolated, but VMs take it to a different level. They literally have everything inside of them needed to be their own computer, including their own operating system kernel. Containers, on the other hand, share the host's kernel and have JUST ENOUGH to run what you want them to run. And so, the increased isolation of VMs comes with increased resource usage.

The way to think about it is to pretend that a server is like a house. Creating VMs would be like making mini-houses in the house. Creating containers would be like turning the entire house into an apartment building.

The point is that, because containers are so much lighter, we can use them to make the most of our server's resources. In plain English, let's say we have 3 applications we want to put on one server. If we make a separate VM for each of those applications, that will absolutely work, but it will use up a lot of our server's space and power, since some VMs can be gigabytes in size. On the other hand, if we make a separate container for each application, we'll have far more space open, since container images can be as small as a handful of megabytes.

But Cole, aren't there things other than Docker?

Of course! Docker is a "container runtime," which is the suit-and-tie way of saying "runs containers." There are alternatives out there, but we stick with Docker for the same reason that, if this were a cooking class, I wouldn't drone on about all of the different brands of pots and pans. Yes, you can buy that iridium-plated frying pan for that "otherworldly" taste, but it's still a frying pan and we're still making burgers.

Final Thoughts on Docker

Hopefully this shows why Docker and containers are so darn useful. Now we can make the most of our server's resources without worrying about the host machine itself. All we have to ask is: Does it have Docker? If it does, we can put it on there.

Docker also does a lot for us in the background. It manages memory and CPU usage, network access...all sorts of things. It makes working with containers a pleasure instead of a pain. I say that because if it's been a while since you've looked at containers and you're still thinking of raw LXC, well, those were limited and not nearly as convenient to work with as Docker-based containers.

Okay, but how can this help us build our cloud architecture out even more? Well, we're going to pretend that our fictional company here has decided it wants to launch another application. And we'll deal with that demand in the next post by discussing Docker, Container Orchestration (ECS, EKS, Kubernetes, Docker Swarm), and how it can help us.

Watch Instead of Read on YouTube

If you'd like to watch instead of read, the Understanding Modern Cloud Architecture series is also on YouTube! Check out the links below for the sections covered in this blog post.

Video 1 - Docker & Containers for Your Modern Cloud Architecture on AWS (for sections 2-5)

Other Posts in This Series

If you're enjoying this series and finding it useful, be sure to check out the rest of the blog posts in it! The links below will take you to the other posts in the Understanding Modern Cloud Architecture series here on Tech Guides and Thoughts so you can continue building your infrastructure along with me.

- Understanding Modern Cloud Architecture on AWS: A Concepts Series

- Understanding Modern Cloud Architecture on AWS: Server Fleets and Databases

- Understanding Modern Cloud Architecture on AWS: Docker and Containers

- Interlude - From Plain Machines to Container Orchestration: A Complete Explanation

- Understanding Modern Cloud Architecture on AWS: Container Orchestration with ECS

- Understanding Modern Cloud Architecture on AWS: Storage and Content Delivery Networks


J Cole Morrison

http://start.jcolemorrison.com

Developer Advocate @HashiCorp, DevOps Enthusiast, Startup Lover, Teaching at awsdevops.io